This is part 10 of a post series about Oracle Edition-Based Redefinition.

Visit the index page for all the parts of the series

Introduction

In a previous post I wrote about Editioning Views and their significant role in online application upgrades using EBR.

In this post we’ll see how editioning views are represented in the data dictionary views.

I’ll discuss only the USER_* views, but everything is true for the corresponding ALL_*, DBA_* and CDB_* views as well

Setup

At this point, after completing three upgrades already (in part 3, part 5 and part 9), our latest edition is V3, and we have the PEOPLE$0 table and two actual instances of the PEOPLE editioning view (one in V2 and one in V3).

Let’s create a new edition – V4:

-- connected as system

ORA$BASE> create edition v4;

Edition created.

ORA$BASE> grant use on edition v4 to demo_ebr;

Grant succeeded.

In the new edition, let’s change the PEOPLE editioning view and give different aliases to some of the columns:

ORA$BASE> conn demo_ebr

Enter password:

Connected.

ORA$BASE> @set_edition v4

Session altered.

V4> create or replace editioning view people as

2 select id,

3 first_name as given_name,

4 last_name as family_name,

5 phone_number,

6 eye_color

7 from people$0;

View created.

And let’s create also a regular view on top of PEOPLE:

V4> create view brown_eyed_people as

2 select *

3 from people

4 where eye_color = 'Brown';

View created.

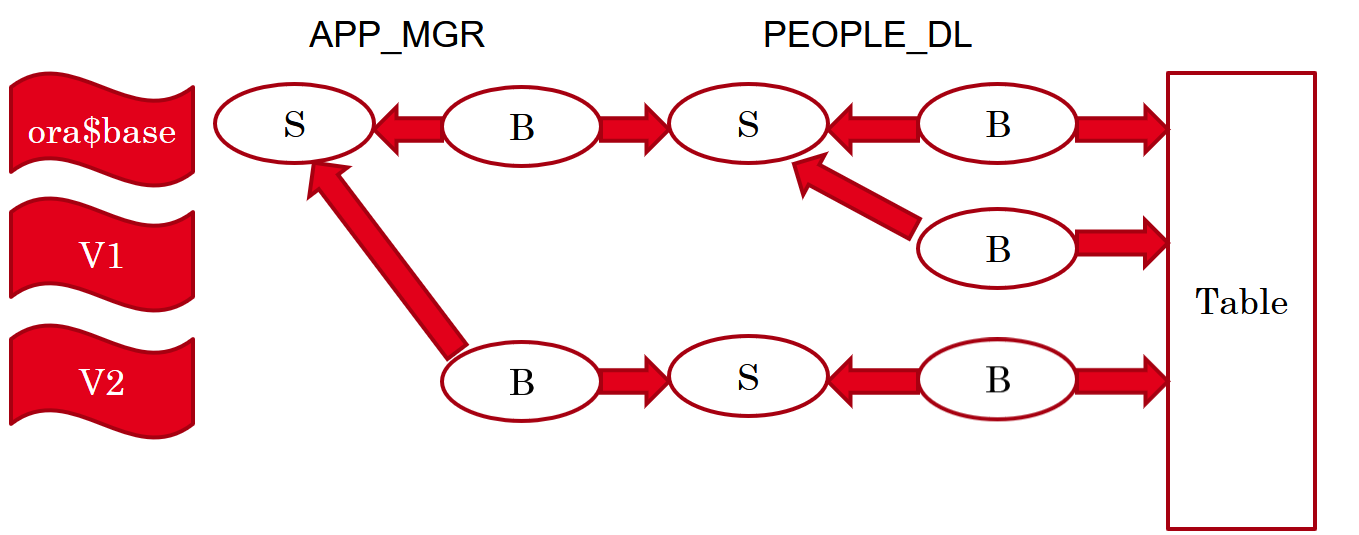

Note that the BROWN_EYED_PEOPLE regular view references the PEOPLE editioning view. As described and emphasized, only editioning views reference tables; all the other editioned objects (including regular views) should reference editioning views (or other regular views) only.

USER_OBJECTS

In USER_OBJECTS and in USER_OBJECTS_AE both regular views and editioning views appear simply as views (the OBJECT_TYPE column contains the value “VIEW”). Editioning views have no distinctive indication here.

V4> select object_type,

2 object_name,

3 edition_name

4 from user_objects

5 where object_type in ('TABLE', 'VIEW')

6 order by 1,2;

OBJECT_TYPE OBJECT_NAME EDITION_NAME

------------ ------------------------------ ------------

TABLE PEOPLE$0

VIEW BROWN_EYED_PEOPLE V4

VIEW PEOPLE V4

V4> select object_type,

2 object_name,

3 edition_name

4 from user_objects_ae

5 where object_type in ('TABLE', 'VIEW')

6 order by 1,2,3;

OBJECT_TYPE OBJECT_NAME EDITION_NAME

------------ ------------------------------ ------------

TABLE PEOPLE$0

VIEW BROWN_EYED_PEOPLE V4

VIEW PEOPLE V2

VIEW PEOPLE V3

VIEW PEOPLE V4

USER_VIEWS

In USER_VIEWS and in USER_VIEWS_AE there is a column – EDITIONING_VIEW – that tells whether a view is an editioning one or not:

V4> select view_name,

2 editioning_view

3 from user_views

4 order by 1;

VIEW_NAME EDITIONING_VIEW

-------------------- -----------------

BROWN_EYED_PEOPLE N

PEOPLE Y

V4> select view_name,

2 editioning_view,

3 edition_name

4 from user_views_ae

5 order by 1,3;

VIEW_NAME EDITIONING_VIEW EDITION_NAME

-------------------- ----------------- ------------

BROWN_EYED_PEOPLE N V4

PEOPLE Y V2

PEOPLE Y V3

PEOPLE Y V4

USER_EDITIONING_VIEWS

There is a dedicated data dictionary view – USER_EDITIONING_VIEWS – that contains only the editioning views.

For each editioning view we can see the view name and the name of the table covered by this view. Recall that (in a specific edition) an editioning view covers one and only one table, and a table may be covered by one editioning view at the most.

V4> select * from user_editioning_views;

VIEW_NAME TABLE_NAME

--------------- ---------------

PEOPLE PEOPLE$0

And there is a corresponding USER_EDITIONING_VIEWS_AE view:

V4> select * from user_editioning_views_ae;

VIEW_NAME TABLE_NAME EDITION_NAME

--------------- --------------- ------------

PEOPLE PEOPLE$0 V2

PEOPLE PEOPLE$0 V3

PEOPLE PEOPLE$0 V4

USER_EDITIONING_VIEW_COLS

There is a dedicated data dictionary view – USER_EDITIONING_VIEW_COLS – that contains for every editioning view all its columns and the corresponding projected columns from the covered table.

V4> select *

2 from user_editioning_view_cols

3 order by 1,2;

VIEW_NAME VIEW_COLUMN_ID VIEW_COLUMN_NAME TABLE_COLUMN_ID TABLE_COLUMN_NAME

--------------- -------------- -------------------- --------------- --------------------

PEOPLE 1 ID 1 ID

PEOPLE 2 GIVEN_NAME 2 FIRST_NAME

PEOPLE 3 FAMILY_NAME 3 LAST_NAME

PEOPLE 4 PHONE_NUMBER 4 PHONE_NUMBER

PEOPLE 5 EYE_COLOR 5 EYE_COLOR

There is also a corresponding USER_EDITIONING_VIEW_COLS_AE view. Let’s use it to see how the PEOPLE editioning view has evolved in the last 3 editions:

V4> select v2,v3,v4

2 from (select *

3 from user_editioning_view_cols_ae

4 where view_name = 'PEOPLE')

5 pivot(

6 max(view_column_name || nullif(' [' || nullif(table_column_name, view_column_name) || ']', ' []'))

7 for edition_name in ('V2' as v2, 'V3' as v3, 'V4' as v4)

8 )

9 order by view_column_id;

V2 V3 V4

-------------- -------------- -------------------------

ID ID ID

FIRST_NAME FIRST_NAME GIVEN_NAME [FIRST_NAME]

LAST_NAME LAST_NAME FAMILY_NAME [LAST_NAME]

PHONE_NUMBER PHONE_NUMBER PHONE_NUMBER

EYE_COLOR EYE_COLOR

For other parts of the EBR series, please visit the index page.